Solr is a crucial component of a Sitecore xDB setup, and it simplifies search index management. With Solr you don't need to store indexes directly on the CD or CM servers, as you typically do with Lucene. Because many parts of Sitecore depend on those indexes (content search, analytics, etc.), it's necessary to ensure high availability and performance so that Solr doesn't become the bottleneck in a large-scale production Sitecore installation. There are two ways to set up a multi-instance Solr production environment for Sitecore: Solr Master-Slave replication and SolrCloud. What are the benefits and disadvantages of each?

- Solr Master-Slave Replication
  - Relatively simple to set up
  - Automatic search index replication
  - Automatic fail-over for index search (requires a load balancer)
  - No fail-over for index updates
  - Improved search performance
  - Legacy approach, to be replaced by SolrCloud

- SolrCloud
  - More complicated setup, requires additional ZooKeeper servers
  - Automatic search index replication
  - Automatic fail-over for both index search and index updates
  - Improved search performance
  - Newer approach, supported experimentally from Sitecore 8.2 onward, or via a custom solution (requires a load balancer)

Sitecore and Solr Master-Slave Replication

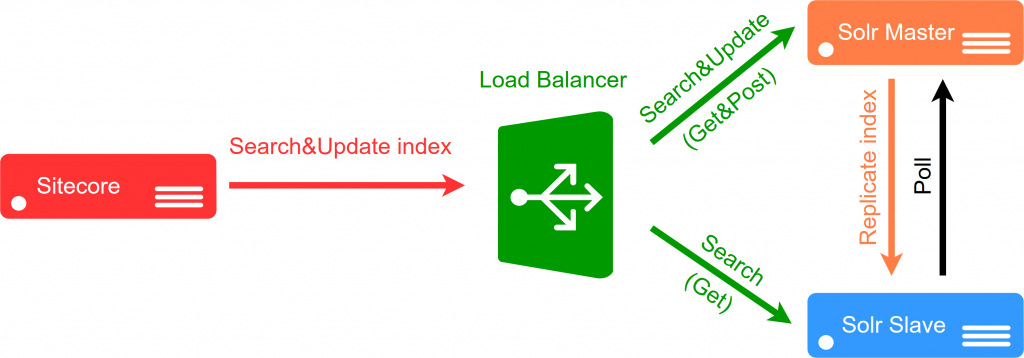

To create a minimal production Solr environment we need at least two Solr servers: Master and Slave. The Master instance both updates and serves (reads) search indexes. The Slave can only be used to read indexes, not to update them. The Slave also periodically polls the Master for the latest index version and, if there is a newer one, replicates it from the Master. What happens if we try to update the index directly on the Slave? Sitecore will be able to do it, but the indexes will become out of sync: each Solr instance will hold a different version of the index, because indexes cannot be replicated from the Slave back to the Master.
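The one-way nature of this replication can be illustrated with a toy model. This is a hypothetical sketch, not Solr code: the `SolrCore` class and `poll` function are invented for illustration, and the real ReplicationHandler compares index versions and copies segment files rather than tracking a single generation counter.

```python
from dataclasses import dataclass, field


@dataclass
class SolrCore:
    """Toy model of one Solr core: a set of document IDs and a generation counter."""
    name: str
    docs: set = field(default_factory=set)
    generation: int = 0

    def commit(self, doc_id: str) -> None:
        # Each committed update produces a new index generation on this core only.
        self.docs.add(doc_id)
        self.generation += 1


def poll(master: SolrCore, slave: SolrCore) -> bool:
    """One poll cycle: the Slave copies the Master's index if their generations differ.

    Data only flows Master -> Slave; nothing is ever pushed back to the Master.
    Returns True if the Slave replaced its index.
    """
    if slave.generation == master.generation:
        return False
    slave.docs = set(master.docs)
    slave.generation = master.generation
    return True


if __name__ == "__main__":
    master, slave = SolrCore("master"), SolrCore("slave")
    master.commit("home")           # normal update, sent to the Master
    poll(master, slave)             # Slave catches up on its next poll
    slave.commit("orphan")          # update written directly to the Slave
    print("orphan" in master.docs)  # False: the Slave's change never reaches the Master
```

In this toy model the direct Slave write is also discarded on the next poll, because the generations differ and the Slave copies the Master's index again.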

In practice this means that only one Solr instance can accept search index updates from Sitecore, so fail-over is only partial. We can make index reads fault-tolerant (the load balancer directs traffic to the Slave if the Master is down), but without a custom solution we can't make index updates fault-tolerant. For example, if the Master is down, our Sitecore website will still work, including content search, but content created after the failure will not be reflected in the search index, i.e. it will not be searchable. We can also add more Solr Slave servers to further improve read performance.

There is one more important piece in this setup: the load balancer. We need to configure it to direct all read traffic (HTTP GET requests) to all Solr servers, and all index update traffic (HTTP POST requests) only to the Solr Master.
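To make the routing rule concrete, here is a minimal Python sketch of the decision a load balancer makes for each request. It is not a load balancer configuration: the `SolrRouter` class, the round-robin policy and the `smartsitecore-solr-slave` host name are hypothetical, and a real setup would express the same rule in the load balancer's own configuration.

```python
import itertools

# Master host taken from the article; the Slave host name is hypothetical.
MASTER = "http://smartsitecore-solr-master:8983/solr"
SLAVES = ["http://smartsitecore-solr-slave:8983/solr"]


class SolrRouter:
    """Sketch of the load-balancer rule: GET -> any healthy server, POST -> Master only."""

    def __init__(self, master, slaves):
        self.master = master
        self.readers = [master, *slaves]
        self._next = itertools.cycle(self.readers)  # round-robin order for reads
        self.down = set()  # servers a health check has marked as unavailable

    def route(self, method):
        if method.upper() == "POST":
            # Index updates can only be applied on the Master.
            if self.master in self.down:
                raise RuntimeError("Master is down: index updates cannot be served")
            return self.master
        # Reads: try each server at most once, skipping the ones marked down.
        for _ in range(len(self.readers)):
            candidate = next(self._next)
            if candidate not in self.down:
                return candidate
        raise RuntimeError("No Solr server available for reads")
```

The `RuntimeError` raised for POST when the Master is marked down is the partial fail-over described above: reads survive a Master outage, updates do not.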

Solr Master Index Replication Configuration

To configure index replication on the Solr Master, we only need a simple change in solrconfig.xml (located in every index definition folder, e.g. \server\solr\sitecore_master_index\conf\), editing the following lines:

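```xml
<requestHandler name="/replication" class="solr.ReplicationHandler" >
  <lst name="master">
    <!-- Make a new index version available to Slaves after startup and after every commit -->
    <str name="replicateAfter">startup</str>
    <str name="replicateAfter">commit</str>
    <!-- Configuration files copied to the Slaves along with the index -->
    <str name="confFiles">schema.xml,stopwords.txt,elevate.xml</str>
    <!-- Keep a commit point reserved for 10 seconds (HH:mm:ss) while Slaves download it -->
    <str name="commitReserveDuration">00:00:10</str>
  </lst>
</requestHandler>
```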

We need to repeat this step for all our indexes.

Solr Slave Index Replication Configuration

To configure index replication on the Solr Slave, we again change only solrconfig.xml, repeating this step for every index on the Slave server:

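```xml
<requestHandler name="/replication" class="solr.ReplicationHandler" >
  <lst name="slave">
    <!-- ${solr.core.name} resolves to the current core, so the same block works for every index -->
    <str name="masterUrl">http://smartsitecore-solr-master:8983/solr/${solr.core.name}</str>
    <!-- Poll the Master every 20 seconds (HH:mm:ss) -->
    <str name="pollInterval">00:00:20</str>
  </lst>
</requestHandler>
```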

Connect Sitecore to Load Balancer and Test

We update the Solr address in Sitecore with a simple config patch:

```xml
<configuration xmlns:patch="http://www.sitecore.net/xmlconfig/">
  <sitecore>
    <settings>
      <setting name="ContentSearch.Solr.ServiceBaseAddress">
        <patch:attribute name="value">http://smartsitecore-solr:8983/solr</patch:attribute>
      </setting>
    </settings>
  </sitecore>
</configuration>
```

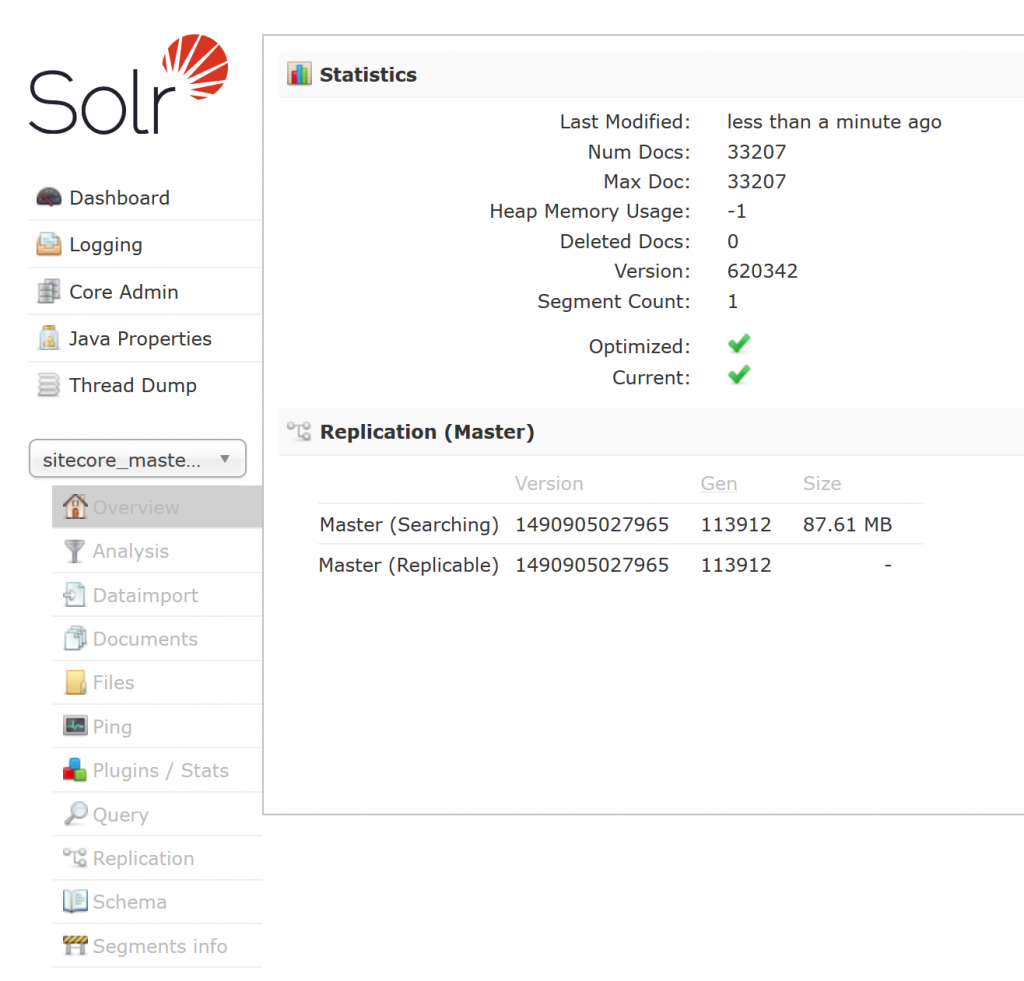

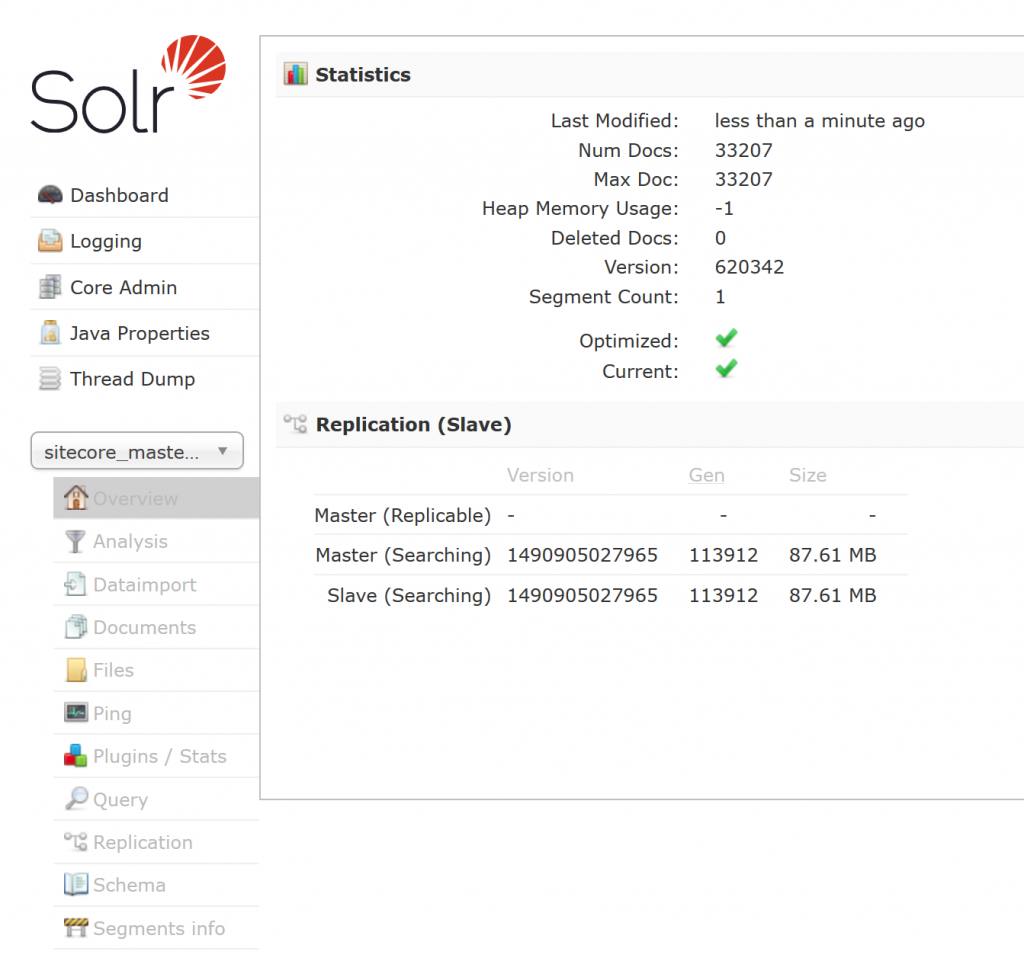

The address should point to our Load Balancer. Now we are ready to test the solution, we can rebuild search indexes in Sitecore’s Control Panel and the login to Admin console on both Master and Slave servers. We should see the same version for our Sitecore indexes on both Master:

and on Solr Slave instance:
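The same comparison can be scripted instead of read from the Admin console. This is a minimal sketch, assuming both servers are reachable and each core exposes the `/replication` handler configured above; the ReplicationHandler's `command=indexversion` returns the version and generation of the latest replicable index. The Slave host name is hypothetical, and the check would need to be repeated for each index core.

```python
import json
import urllib.request

# Master host and core name come from the article; the Slave host is hypothetical.
MASTER_URL = "http://smartsitecore-solr-master:8983/solr"
SLAVE_URL = "http://smartsitecore-solr-slave:8983/solr"
CORE = "sitecore_master_index"


def fetch_index_version(base_url, core, timeout=5.0):
    """Return (indexversion, generation) reported by the core's /replication handler."""
    url = f"{base_url}/{core}/replication?command=indexversion&wt=json"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        body = json.load(resp)
    return int(body["indexversion"]), int(body["generation"])


def in_sync(master_version, slave_version):
    """The Slave has caught up when both cores report the same version and generation."""
    return master_version == slave_version


# Example (needs network access to both Solr servers):
# print(in_sync(fetch_index_version(MASTER_URL, CORE),
#               fetch_index_version(SLAVE_URL, CORE)))
```

Right after a rebuild the Slave can lag by up to one `pollInterval` (20 seconds in the configuration above), so a brief mismatch is expected.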

Sitecore and SolrCloud

SolrCloud is a more modern way to create a high-performance, highly available Solr cluster. It simplifies some of the manual tasks needed to set up index replication and sharding:

- Index replication: copying the whole index to other Solr instances, used to improve performance and availability.

- Index sharding: dividing a search index across multiple Solr instances, used to improve performance when the index is too large to be handled by a single machine.
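The difference between the two can be sketched in a few lines: sharding decides which shard a document belongs to, replication decides how many nodes hold a copy of each shard. This is a simplified illustration, not SolrCloud's algorithm: SolrCloud's default `compositeId` router hashes document IDs with MurmurHash3 into hash ranges owned by shards, whereas this sketch uses `zlib.crc32` modulo the shard count, and the node names and round-robin placement are hypothetical.

```python
import zlib


def shard_for(doc_id: str, num_shards: int) -> int:
    """Pick a shard by hashing the document ID (a stand-in for SolrCloud's router)."""
    return zlib.crc32(doc_id.encode("utf-8")) % num_shards


def place_replicas(num_shards: int, nodes: list[str], replication_factor: int) -> dict[int, list[str]]:
    """Put each shard's copies on distinct nodes, rotating the starting node per shard."""
    if replication_factor > len(nodes):
        raise ValueError("replication_factor cannot exceed the number of nodes")
    return {
        shard: [nodes[(shard + i) % len(nodes)] for i in range(replication_factor)]
        for shard in range(num_shards)
    }
```

With two shards, three nodes and a replication factor of two, `place_replicas(2, ["solr1", "solr2", "solr3"], 2)` gives `{0: ["solr1", "solr2"], 1: ["solr2", "solr3"]}`: any single node can fail and every shard still has a live copy.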

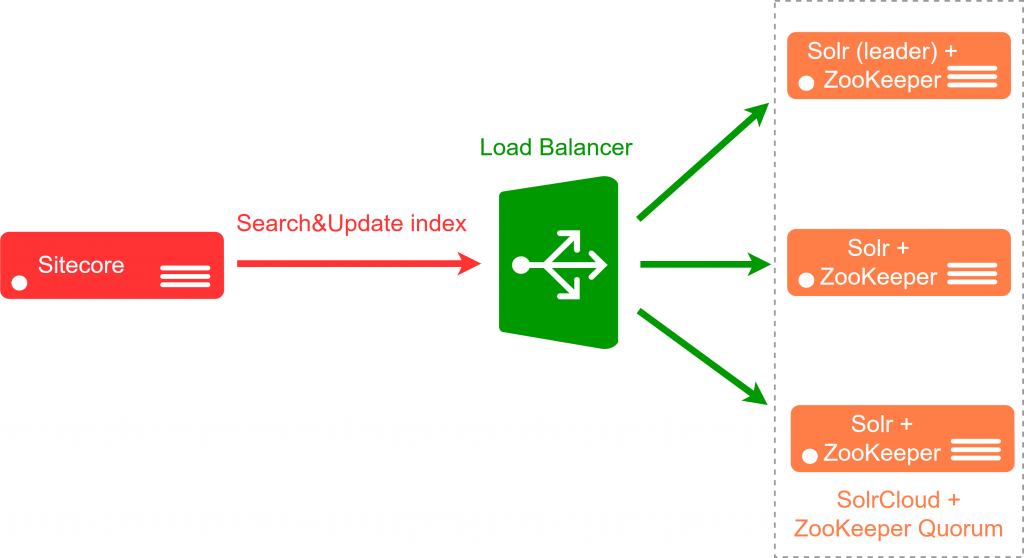

SolrCloud also provides automatic fail-over for both index search (by distributing queries across multiple Solr instances) and index updates. Index updates are executed on one of the Solr nodes in the cluster, called the leader. SolrCloud uses additional ZooKeeper nodes to configure and monitor the state of the cluster and to nominate a new Solr leader if the current one fails. Because this is ZooKeeper's only role, it doesn't need powerful machines.
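To illustrate how a new leader can be nominated, here is a simplified sketch of ZooKeeper's standard leader-election recipe, which SolrCloud's leader election builds on: each replica of a shard registers an ephemeral sequential znode, the lowest sequence number wins, and when the leader's ZooKeeper session expires its znode disappears, so the next replica takes over. The function and replica names are hypothetical, and real SolrCloud does extra work (for example, syncing with the other replicas) before a new leader accepts updates.

```python
from typing import Optional


def elect_leader(registrations: dict[str, int], live: set[str]) -> Optional[str]:
    """Return the live replica with the lowest election sequence number.

    `registrations` maps replica name -> sequence number it received when it
    joined the election (like an ephemeral sequential znode). A replica whose
    ZooKeeper session has expired is simply absent from `live`.
    """
    candidates = [(seq, node) for node, seq in registrations.items() if node in live]
    return min(candidates)[1] if candidates else None
```

For example, with `{"solr1": 1, "solr2": 2, "solr3": 3}` the leader is `solr1`; if `solr1` drops out of the live set, `solr2` becomes the leader without any manual intervention.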

ZooKeeper itself also needs more than one machine to handle failures. The official guide says "To create a deployment that can tolerate the failure of F machines, you should count on deploying 2xF+1 machines". So to tolerate one failure we need a minimum of three ZooKeeper instances.
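The 2xF+1 rule follows from ZooKeeper requiring a majority (quorum) of the ensemble to stay up. A small sketch of the arithmetic; the function names are illustrative only:

```python
def ensemble_size(failures_to_tolerate: int) -> int:
    """ZooKeeper guideline: tolerating F failed servers needs 2F + 1 servers."""
    return 2 * failures_to_tolerate + 1


def tolerated_failures(ensemble: int) -> int:
    """A majority must survive, so n servers tolerate floor((n - 1) / 2) failures."""
    return (ensemble - 1) // 2
```

Note that a fourth server adds no extra tolerance: `tolerated_failures(4)` is still 1, the same as for three servers, which is why ensembles are usually sized with an odd number of machines.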

In an ideal scenario ZooKeeper should be installed on separate machines, but to reduce the number of servers it can be installed on the same machines as Solr (it is recommended to place it on different hard disks), so the overall architecture will look like this:

Again, the only change needed in Sitecore is to point "ContentSearch.Solr.ServiceBaseAddress" to the load balancer. If you want to learn more, I encourage you to check out the great series of posts about configuring SolrCloud by Chris Sulham:

http://www.chrissulham.com/sitecore-on-solr-cloud-part-1
